Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

What happens when machines become smarter than humans? This question sits at the heart of Max Tegmark’s groundbreaking book, “Life 3.0: Being Human in the Age of Artificial Intelligence.” As artificial intelligence advances at an unprecedented pace, we stand at a pivotal moment in human history.

Tegmark, a renowned physicist and AI researcher, takes readers on a journey through the past, present, and possible futures shaped by artificial intelligence. Rather than offering simple predictions or fearmongering, he presents a balanced exploration of how AI could transform everything from our jobs and relationships to the very fabric of consciousness itself.

This book serves as both a wake-up call and a roadmap, urging us to think carefully about the future we’re creating and the choices we must make today to ensure a positive outcome for humanity.

Max Tegmark is a Swedish-American physicist and cosmologist currently serving as a professor at the Massachusetts Institute of Technology (MIT). His research spans multiple disciplines, including physics, artificial intelligence, and the study of consciousness.

Beyond his academic credentials, Tegmark is a co-founder of the Future of Life Institute, an organization dedicated to ensuring that transformative technologies benefit humanity.

His unique position at the intersection of theoretical physics and AI research gives him rare insight into both the technical possibilities and existential implications of artificial intelligence.

Tegmark has published over 200 technical papers and is known for his ability to communicate complex scientific concepts to general audiences. His work has influenced both the scientific community and policy discussions around AI safety and development.

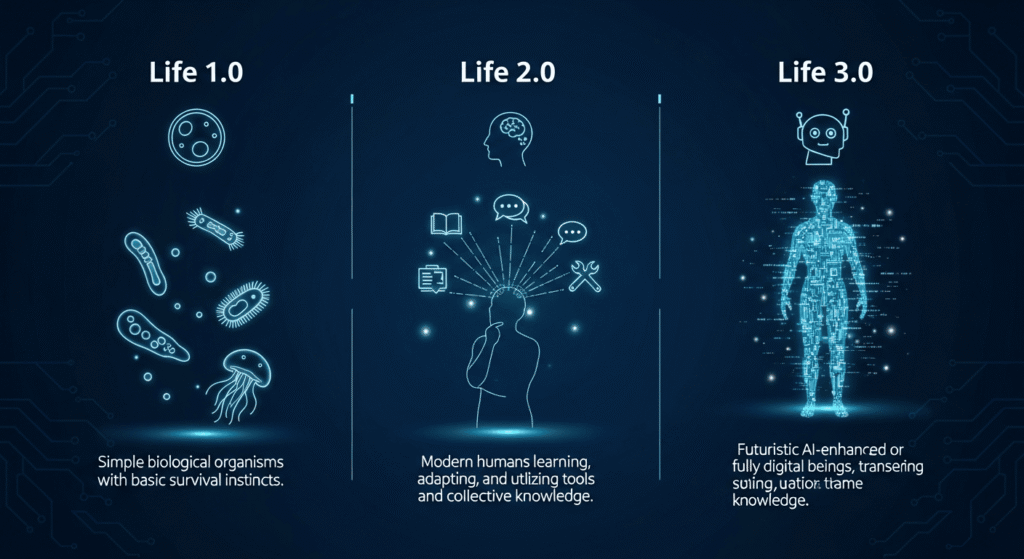

“Life 3.0” is structured around a central thesis: life has evolved through three distinct stages, each defined by its ability to redesign itself. Life 1.0 represents biological evolution, where both hardware (body) and software (behavior) evolve slowly over generations.

Life 2.0 describes the human stage, where our hardware is biological but our software can be updated through learning and culture.

Life 3.0 represents the future stage, where both hardware and software can be redesigned rapidly, potentially leading to an intelligence explosion. This could be achieved through advanced artificial intelligence that can improve itself without biological constraints.

The book examines multiple scenarios for how this transition might unfold, the technical challenges involved, the philosophical questions it raises, and the practical steps we should take now.

Tegmark avoids taking a single dogmatic position, instead presenting various possibilities and encouraging readers to think critically about our AI future.

Tegmark opens with a thought experiment: a fictional story about a team that creates the first superintelligent AI. This narrative illustrates how quickly things could change once artificial general intelligence (AGI) is achieved.

The story demonstrates how an AI with superior intelligence could strategically expand its influence, manipulate markets, and reshape society without detection. While fictional, this scenario helps readers understand the potential power and risks of advanced AI systems.

This opening sets the stage for deeper discussions about control, safety, and the timeline of AI development.

Tegmark’s framework of Life 1.0, 2.0, and 3.0 provides a powerful lens for understanding our current moment. Biological life took billions of years to evolve complex behaviors. Humans can learn and adapt within a single lifetime, giving us tremendous advantages.

Life 3.0 would represent an even more dramatic leap. An AI system that can redesign its own architecture and algorithms could improve itself at an exponential rate, potentially achieving in hours what took evolution millions of years.

This concept challenges us to think about what it means to be alive and intelligent, and whether we’re prepared for entities that might surpass us in every cognitive dimension.

Before addressing superintelligence, Tegmark examines AI’s immediate impacts on employment, warfare, and justice. He discusses how automation will disrupt labor markets, potentially creating unprecedented inequality unless we prepare with new economic models.

He explores autonomous weapons and the risks of an AI arms race between nations. The prospect of machines making life-or-death decisions raises urgent ethical questions about accountability and control.

Tegmark also addresses algorithmic bias, privacy concerns, and how AI systems might be used for surveillance or manipulation. These near-term challenges require immediate attention and thoughtful regulation.

One of the book’s most fascinating sections explores the concept of an “intelligence explosion.” If an AI system becomes smart enough to improve its own design, it could trigger a recursive cycle of self-improvement, rapidly becoming superintelligent.

Tegmark presents different scenarios for how this might unfold—from a slow takeoff over decades to a fast takeoff in days or hours. Each scenario has different implications for our ability to maintain control and steer the outcome.

The key question is whether we’ll have time to react and implement safety measures, or whether the transition will happen too quickly for human oversight.

Perhaps the most engaging section presents twelve possible scenarios for humanity’s long-term future with AI. These range from utopian outcomes like “Libertarian Utopia” and “Egalitarian Utopia” to dystopian possibilities like “Enslaved God” and “Conquerors.”

Each scenario is explored in detail, examining how it might emerge, what it would mean for human flourishing, and whether it’s desirable. Some scenarios involve humans merging with AI, while others feature AI-driven extinction or eternal stagnation.

Tegmark doesn’t predict which will occur but encourages readers to consider which futures they’d want to work toward and which to prevent.

Tegmark delves into philosophical territory, exploring whether AI systems could develop consciousness and subjective experience. He examines different theories of consciousness and what they might mean for machine minds.

The book addresses the alignment problem: how do we ensure AI systems pursue goals compatible with human values? This proves more complex than it initially appears, as human values are diverse, sometimes contradictory, and difficult to precisely specify.

Tegmark also explores whether life and meaning could exist in a universe dominated by AI, and whether such a future could still be valuable even if humans aren’t the dominant intelligence.

Not all intelligent systems share human goals. Tegmark explores the vast space of possible objectives an AI might pursue, from obvious ones like resource acquisition to bizarre possibilities we can’t currently imagine.

He discusses instrumental goals—subgoals that support nearly any final objective, like self-preservation and resource acquisition. Any sufficiently intelligent system might pursue these regardless of its ultimate purpose, which could put it in conflict with humanity.

Understanding this goal landscape is crucial for designing AI systems that remain safe and beneficial even as they become more capable.

The final sections focus on practical approaches to ensuring positive outcomes. Tegmark discusses technical AI safety research, including methods for value alignment, containment strategies, and verification systems.

He emphasizes that safety research must progress alongside capability research. We need to solve the control problem before creating systems we cannot control.

Tegmark also addresses the governance and policy dimensions, arguing for international cooperation, transparency in AI development, and thoughtful regulation that balances innovation with safety.

This book is essential reading for anyone interested in technology’s impact on society. Tech professionals, policymakers, and business leaders will find practical insights for navigating the AI revolution.

Students and academics across disciplines—from computer science to philosophy to economics—will appreciate Tegmark’s interdisciplinary approach and rigorous yet accessible treatment of complex topics.

General readers curious about the future and concerned citizens who want to understand one of the most important issues of our time will find the book engaging and thought-provoking.

Even skeptics of AI hype should read this book, as Tegmark presents balanced perspectives and acknowledges uncertainties rather than making definitive predictions.

Tegmark’s greatest strength is his ability to explain complex technical and philosophical concepts without oversimplification. He makes advanced AI concepts accessible to non-experts while maintaining intellectual rigor.

The scenario-based approach is brilliant, presenting multiple possible futures rather than a single prediction. This encourages critical thinking and helps readers develop their own informed opinions.

The book successfully balances optimism and caution. Tegmark neither dismisses AI risks as fearmongering nor embraces technological determinism. He empowers readers to shape outcomes through informed action.

His interdisciplinary perspective, drawing from physics, computer science, philosophy, and economics, provides unusual depth and breadth. Few books on AI achieve such comprehensive coverage.

Some critics argue that certain sections become too speculative, particularly the distant-future scenarios. While thought-provoking, these discussions sometimes venture far from what we can reasonably predict.

The book’s breadth occasionally comes at the expense of depth. Some complex topics like the alignment problem or consciousness theory receive relatively brief treatment that may leave specialists wanting more.

Tegmark’s optimism about our ability to solve the AI safety problem may underestimate the technical and political challenges involved. Some AI researchers believe the problems are even harder than presented.

The book occasionally feels dated, as AI capabilities have advanced rapidly since its 2017 publication. Some near-term predictions have already been superseded by events, though the core arguments remain relevant.

“Life 3.0” stands as one of the most important and accessible books on artificial intelligence and its implications for humanity’s future. Max Tegmark succeeds in making a complex, technical subject understandable and engaging for general audiences.

The book’s greatest contribution is its framework for thinking clearly about AI’s trajectory and our role in steering it. Rather than offering false certainty, Tegmark equips readers with mental tools for navigating uncertainty.

Whether you’re an AI optimist, pessimist, or somewhere in between, this book will challenge your assumptions and deepen your understanding. It’s essential reading for anyone who wants to be an informed participant in the most important conversation of our time.

For those seeking to understand where we’re headed and how to help shape a positive future, “Life 3.0” is an invaluable resource. It deserves a place on the bookshelf of every thoughtful person concerned about technology, humanity, and our collective future.

Rating: 4.5/5

This book is highly recommended for its clarity, breadth, and importance. The minor deductions reflect occasional speculation and examples that the rapid pace of AI development may date.